Submitted by Jacob Weiskopfh Ph.D on

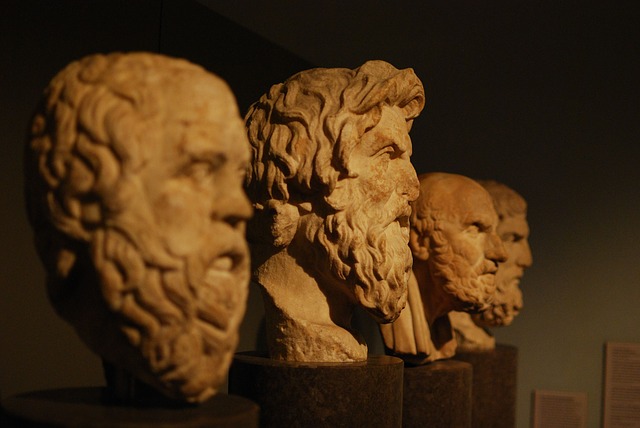

Image by morhamedufmg from http://Pixabay.com

If we were to create, or become, post- or transhuman superintellects, would all important philosophical questions be answered?

You might think so. If fundamental philosophical questions about knowledge, value, meaning, and mentality aren't entirely hopeless -- if we can make some imperfect progress on them, even with our frail human intellects, so badly designed for abstract philosophical theorizing -- then presumably entities who are vastly intellectually superior to us in the right dimensions could make more, maybe vastly more, philosophical progress. Maybe they could resolve deep philosophical questions as easily as we humans can solve two-digit multiplication problems.

Or here's another thought: If all the facts of the universe are ultimately facts about microphysics and larger-level patterns among entities constituted microphysically, then the main block to philosophical understanding might be only the limits of our observational methods and our computational power. Although no superintelligence in the universe could realistically calculate every motion of every particle over all of time, maybe all of the "big picture" general issues at the core of philosophy would prove tractable with much better observational and calculational tools.

And yet...

I want to say no. Philosophy never could be fully "solved", even by a superintelligence. (It might end, of course, in some other way than being fully solved, but that's not the kind of end Xia or I had in mind.)

First reason: Any intelligent system will be unable to fully predict itself. It will thus always remain partly unknown to itself. This lack of self-knowledge will remain an ineradicable seed for new philosophy.

To predict its own behavior, a system will require a subsystem or subprocess dedicated to the task of prediction. That subsystem or subprocess could potentially model all of the entity's other subsystems and subprocesses to an arbitrarily high degree of detail. But the subsystem could not model itself in perfect detail without creating a perfect model of its own modeling procedures. And then, to fully predict itself, it would need a perfect model of its perfect model of its modeling procedures, and so on, off into a vicious infinite regress.

Furthermore, some calculations are sufficiently complicated that the only way to predict their outcome is to actually do them. For any complex cognitive task, there will (plausibly) be a minimum amount of time required to physically construct and run the process by which it is done. If there is no limit to the complexity of some problems, there will also (plausibly) be no limit to the minimum amount of time even an ideal process would require to perform the cognitive task, even if the cognitive task is completable in principle. Therefore, given any finite amount of time to construct the prediction, there will always be some outcomes that a superintelligence will be unable to foresee.

Now even if you grant that no superintelligent system could fully predict itself, it doesn't straightaway follow that philosophical questions will remain. Maybe the only sorts of questions that escape the superintelligence's predictive powers are details insufficiently grand to qualify as philosophical -- like the 10^10^100th digit of pi?

No, realistically, the actual self-predictive power of any practical superintelligence will always fall far, far short of that. As long as it has some challenging tasks and interests, it won't be able to predict exactly how it will cope with them until it actually copes with them. It won't know the outcome of its mega-intelligent processes until it runs them. So it will always remain partly a mystery to itself. It will be left to wonder uncertainly about what to value and prioritize in light of its ignorance about its own future values and priorities. I'd call that philosophy enough.

Second reason: No amount of superintelligence can, I suspect, entirely answer the question of fundamental values. I don't intend this in any especially mysterious way. I'm not appealing to spooky values that somehow escape all empirical inquiry. But it does seem to me that a general-capacity superintelligence ought always to be able to question what it cares about most. A superintelligence might calculate with high certainty that the best thing to do next, all things considered, would be A. But it could reopen the question of the value weightings that it brings to that calculation.

Again, we face a kind of regress. Given values A, B, and C, weighted thus-and-so relative to each other, A might be clearly the best choice. But why value A more than C? Well, the intelligence could do further inquiry into the value of A -- but that inquiry too will be based on a set of values that it is at least momentarily holding fixed. It could challenge those values using still other values....

The alternative seems to be the view that there's only one possible overall value system that a superintelligence could arrive at, and that once it has arrived there it need never reflect on its values again. This strikes me as implausible when I think about the diversity of things that people value and about how expanding capacities and experience increase that diversity rather than shrink it. As new situations, opportunities, and vistas open up for any being, no matter how intelligent, it will have new occasions to reflect on changes to its value system. Maybe it invents whole new forms of math, or art, or pleasure -- novel enough that big questions arise about how to weigh the pursuit of these new endeavors against other pursuits it values, and unpredictable enough in long-term outcome that some creativity will be needed to manage the uncertain comparison.

No superintelligence could ever become so intelligent as to put all philosophical questions permanently to rest.

Eric Schwitzgebel

http://schwitzsplinters.blogspot.com/2019/06/will-philosophy-ever-come-to-end.html
